As previously discussed, projector-based AR is a promising display method for LayupRITE. However, it still has some downsides. In this post, we will discuss other AR and interactivity methods that were tested as part of the various LayupRITE projects. At the end of the post, we will put forward the AR method chosen to be carried forward into LayupRITE101, the next iteration of this project.

Head-Mounted Displays – Microsoft HoloLens

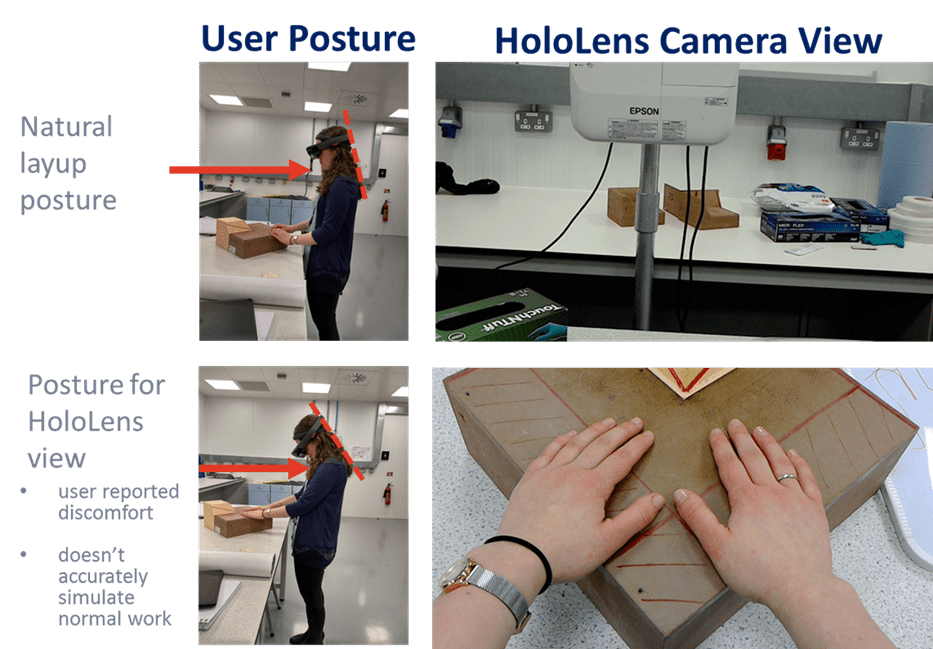

Head-mounted displays are always going to be an attractive option for augmented reality. Directly overlaying digital information onto the user’s field-of-view (FOV) requires no additional mapping to match the user’s perspective, and it leaves the user’s hands free. The HoloLens adds to this with depth mapping for its projected “holograms”, as well as hand tracking and voice commands for control and interaction. Depth mapping allows digital assets to be partially (or fully) “occluded”, i.e. hidden behind real-world, physical objects.
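
The depth test behind this kind of occlusion can be sketched in a few lines. This is a minimal illustration, assuming per-pixel depth maps are available for both the real scene (from the headset’s depth sensing) and the rendered virtual content. `occlusion_mask` is a hypothetical helper, not part of the HoloLens API; in practice this comparison is done on the GPU via the depth buffer.

```python
from typing import List, Optional

# Per-pixel depth in metres; None means no surface (real) or no content (virtual).
DepthMap = List[List[Optional[float]]]


def occlusion_mask(real_depth: DepthMap, virtual_depth: DepthMap) -> List[List[bool]]:
    """Return True for each pixel where the virtual content should be drawn."""
    mask = []
    for real_row, virt_row in zip(real_depth, virtual_depth):
        row = []
        for real_d, virt_d in zip(real_row, virt_row):
            if virt_d is None:
                row.append(False)  # no virtual content at this pixel
            elif real_d is None:
                row.append(True)  # nothing real in the way
            else:
                row.append(virt_d < real_d)  # draw only if the virtual surface is nearer
        mask.append(row)
    return mask


# Usage: a 2x2 patch where part of the virtual net lies behind the tool surface.
real = [[0.50, 0.50], [None, 0.80]]
virtual = [[0.40, 0.60], [0.70, None]]
print(occlusion_mask(real, virtual))  # [[True, False], [True, False]]
```

Pixels where the virtual net is farther away than the real tool are masked out, which is exactly the “far side of the tool” effect described next.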

For LayupRITE, this depth mapping could be used to hide the portions of the virtual net on the far side of the tool from the user, better mimicking the real-world scenario. On paper, the HoloLens showed real promise for LayupRITE; unfortunately, practical and ergonomic concerns made it unsuitable. Hand layup of composite plies is close-in work, all occurring within arm’s length. Additionally, materials are usually draped onto tools on tables in front of the operator. Together, these two factors mean that the vast majority of manual layup work occurs close in and below the user’s eyeline. To bring this work into the HoloLens’ limited holographic FOV, the user must tilt their head down to uncomfortable angles. This poor posture, coupled with the additional weight of the headset, made the HoloLens unsuitable from an ergonomic perspective.

Some of these drawbacks, namely the FOV issues and weight distribution, have been addressed by the newer HoloLens 2. However, it is unclear whether the updated hardware will markedly improve the ergonomic situation. Until we can test a wider variety of HMDs, they do not appear to be a viable solution at the moment, although there are some promising-looking devices.

Tablet/Device-Based AR

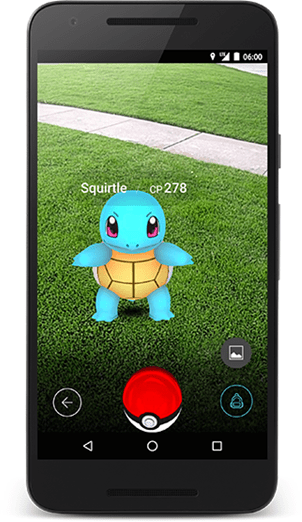

Holding a device with a screen and a rear-facing camera to overlay virtual/digital content on an image of the real world is easily the most common AR method, with examples ranging from games such as Pokémon GO to IKEA’s Place AR app for iOS. Most of these applications lie in either the gaming or the advertising space, but there are also industrial AR apps for assembly and manufacture.

A device with a screen and rear-facing camera lets the user point the camera at an object, target, or space, and displays a live feed from the camera on the screen. The application then recognises the space/target/object and displays digital content. This content comes in two forms: a 2D overlay, like a HUD, or scaled and oriented 3D content. The level of interactivity varies: some applications only display information, while others let the user interact with the digital content via the screen/device, rather than in the real-world space.
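
Once the application has recognised the target and estimated its pose relative to the camera, placing scaled and oriented 3D content comes down to a standard pinhole-camera projection. The sketch below is a minimal illustration, assuming the pose (a 3x3 rotation and a translation) and the camera intrinsics (`fx`, `fy`, `cx`, `cy`) are already known. `project_point` is a hypothetical helper rather than part of any particular AR SDK, and lens distortion is ignored.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def project_point(
    point_target: Vec3,
    rotation: Sequence[Sequence[float]],
    translation: Vec3,
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> Tuple[float, float]:
    """Project a 3D point, given in the target's frame, to pixel coordinates.

    rotation (3x3) and translation are the target's pose in the camera frame,
    i.e. what the tracker estimates each frame after recognising the target.
    """
    # Rigid transform: target coordinates -> camera coordinates.
    xc, yc, zc = (
        sum(rotation[i][j] * point_target[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if zc <= 0:
        raise ValueError("point is behind the camera")
    # Pinhole model: perspective divide, then apply focal lengths and principal point.
    return fx * xc / zc + cx, fy * yc / zc + cy


# Usage: target 0.5 m straight ahead, content point 0.1 m along the target's x-axis.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(project_point((0.1, 0.0, 0.0), identity, (0.0, 0.0, 0.5), 800, 800, 320, 240))  # (480.0, 240.0)
```

Repeating this for every vertex of a 3D asset each frame is what keeps the content “attached” to the target as the device moves; a 2D HUD overlay skips this step entirely.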

What does this mean for LayupRITE?

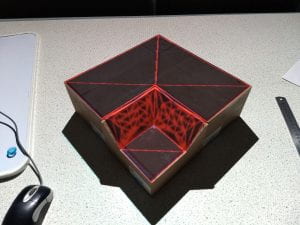

Several display methods are available for LayupRITE. Projected Interactive Augmented Reality (PIAR), the method used in previous phases of LayupRITE development, has many benefits and, when fully realised, is probably the ideal method for display and interaction on the tool/part-in-progress. However, in its current state, particularly given its complicated setup and calibration routine, it isn’t as slick or suitable as it needs to be. Concerns have also been raised about the cost per system/user.

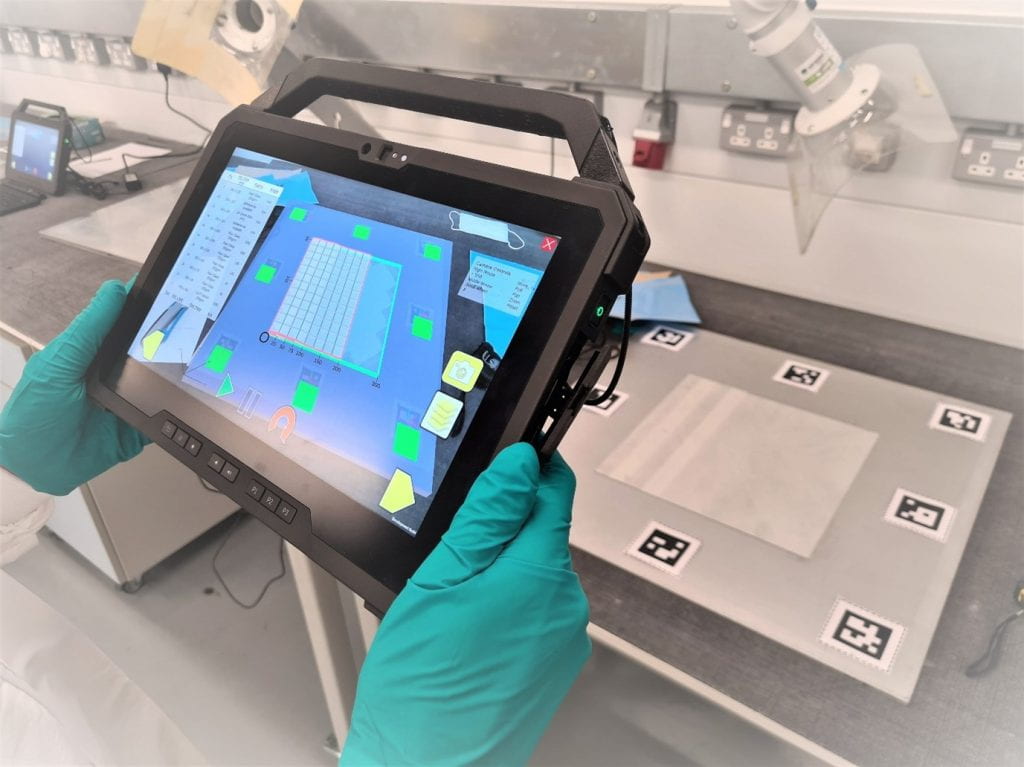

For LayupRITE101, we have moved to a device-based AR method. This currently runs on a Windows 10 tablet with a rear-facing camera. The camera tracks ArUco markers fixed to the tool, similar to the tool tracking used in project 1. This method removes the need for the Kinect™ and projector, lowering cost and simplifying setup at the expense of interactivity. It also means a single tablet can be used for both the classroom content and the AR lessons in the workshop. As always, the AR method will likely need refining and developing before it’s truly product-ready.
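
Because the markers are fixed to the tool at known positions, the tool’s pose can be recovered by chaining transforms: the marker pose reported by the tracker each frame, followed by the marker’s fixed mounting offset on the tool. The sketch below uses 4x4 homogeneous matrices and assumes a marker detector (such as the ArUco module in OpenCV) already supplies the camera-to-marker pose. All function names are hypothetical, and a real system would typically combine several markers rather than rely on one.

```python
import math
from typing import List

Mat4 = List[List[float]]


def matmul4(a: Mat4, b: Mat4) -> Mat4:
    """Multiply two 4x4 homogeneous transforms (the result applies b first, then a)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def pose(yaw_rad: float, x: float, y: float, z: float) -> Mat4:
    """Rotation about the z-axis followed by a translation, as a 4x4 matrix."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [
        [c, -s, 0.0, x],
        [s, c, 0.0, y],
        [0.0, 0.0, 1.0, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


def tool_in_camera(camera_T_marker: Mat4, marker_T_tool: Mat4) -> Mat4:
    """Chain the tracked marker pose with the marker's fixed mounting offset on the tool."""
    return matmul4(camera_T_marker, marker_T_tool)


# Usage: marker seen 0.6 m away, rotated 90 degrees; tool origin 0.1 m along the marker's x-axis.
camera_T_marker = pose(math.pi / 2, 0.0, 0.0, 0.6)
marker_T_tool = pose(0.0, 0.1, 0.0, 0.0)
tool = tool_in_camera(camera_T_marker, marker_T_tool)
print([round(row[3], 3) for row in tool[:3]])  # [0.0, 0.1, 0.6]
```

The rotated marker moves the tool origin onto the camera’s y-axis rather than its x-axis, which is why the order of composition matters.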

Lastly, it’s important to point out the utility of developing the drape model to work within a game engine such as Unity. The development environment lets us use prefabs to target display types (PIAR, tablet AR, VR, etc.), so we can build the software modules for multiple display types. So, whilst we’re using tablet-based AR for now, there’s nothing stopping us from developing a VR version or deploying the new and updated software onto a PIAR system in the future.
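
Unity prefabs are C# assets, so the following is only a language-neutral analogue, written in Python, of the idea: the drape model produces one output, and interchangeable display targets decide how to present it. All class and function names are hypothetical and do not reflect LayupRITE’s actual code; each `show_net` simply returns a description instead of rendering.

```python
from abc import ABC, abstractmethod
from typing import List, Tuple

Node = Tuple[float, float, float]  # one node of the simulated drape net (x, y, z)


class DisplayTarget(ABC):
    """Hypothetical interface that each display front-end implements."""

    @abstractmethod
    def show_net(self, net: List[Node]) -> str:
        """Render the drape net for this display type (here: return a description)."""


class TabletAR(DisplayTarget):
    def show_net(self, net: List[Node]) -> str:
        return f"tablet: overlay {len(net)} nodes on the camera feed"


class PIAR(DisplayTarget):
    def show_net(self, net: List[Node]) -> str:
        return f"PIAR: project {len(net)} nodes onto the tool"


class VR(DisplayTarget):
    def show_net(self, net: List[Node]) -> str:
        return f"VR: render {len(net)} nodes on a virtual tool"


def run_lesson(net: List[Node], display: DisplayTarget) -> str:
    # The drape-model output is shared; only the display target is swapped.
    return display.show_net(net)


net = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.02)]
for display in (TabletAR(), PIAR(), VR()):
    print(run_lesson(net, display))
```

Adding a new display type then means adding one new target, with the lesson logic and drape model left untouched.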